The development of Data Analytics (DA) makes it possible to exploit collected and accessible data to produce data hints: descriptive analyses, prescriptions, predictions, early warnings, anomaly detection, suggestions, heatmaps, recommendations, decision support, routing, classification, detection, video processing, etc. Most of these processes can use ML, AI, XAI, NLP, operations research, statistical techniques, and any kind of library.

For each Data Analytics process, one should answer the following questions:

- Which kind of hints do I have to develop/produce? What is my target goal?

- Where are these hints produced?

- How often do I have to execute the process?

- Are there separate training and execution phases?

- Which is the best language to exploit certain libraries and AI/XAI models?

- How do I assess the quality of what I am going to produce? Which metrics do I have to use?

- Etc.
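For the question on quality assessment, the sketch below shows a few common metrics computed with the Python standard library only. The choice of metrics is an assumption made for illustration (mean absolute error and root mean squared error for predictions, precision and recall for detection or classification); the metrics that actually matter depend on the target goal. The function names are hypothetical.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error between observed and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large errors more than MAE."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for one class, e.g. 'anomaly detected'."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Illustrative values only, not real measurements.
print(mae([1, 2, 3], [1, 2, 5]))
print(rmse([0, 0], [3, 4]))
print(precision_recall([1, 0, 1, 1], [1, 1, 0, 1]))
```

Error metrics such as MAE and RMSE suit predictions of numeric values, while precision and recall suit early warning and anomaly detection, where missed events and false alarms have different costs.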

Snap4City provides a specific tutorial on Data Analytics development, with several examples:

https://www.snap4city.org/download/video/course/da/

We also suggest reading the Snap4City booklet on Data Analytics solutions:

https://www.snap4city.org/download/video/DPL_SNAP4SOLU.pdf

The following is a code example in Python.

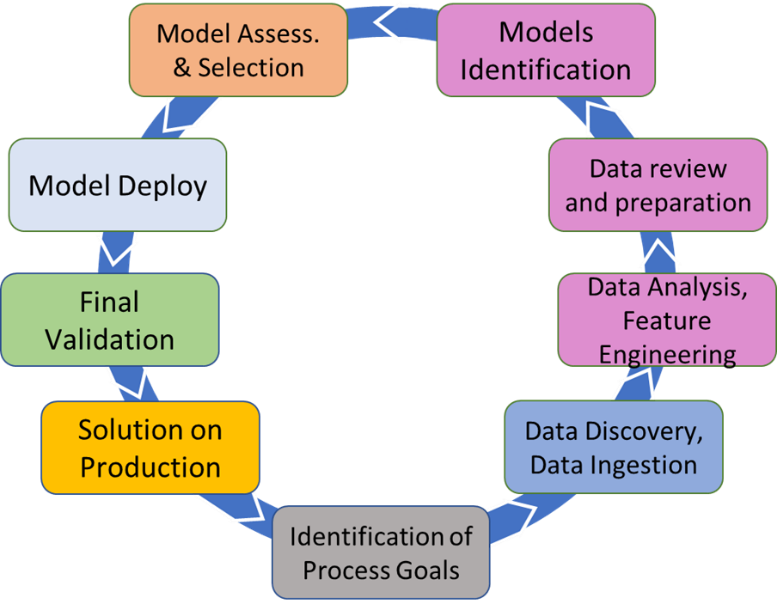

For model/technique development and testing:

- Identification of process goals and planning

  - Which goals.

  - How to compute, which language.

  - Which environment, which libraries.

- Data discovery and ingestion (from the general life cycle; as presented above, it can be taken for granted).

- Data analysis: feature engineering, feature selection, feature reduction.

- Data review and preparation for the model: management of encoding if necessary, addressing seasonality if needed, data imputation, noise reduction, etc.

- Model identification and building: ML, AI, etc.

- Training, setting ranges, and tuning hyperparameters when possible.

- Model assessment and selection

  - Assessment on a set of metrics depending on the goals: global relevance and feature assessment.

  - Validation in testing.

  - Global and local explanation via Explainable AI (XAI) techniques.

  - Assessing computational costs.

  - Impact assessment, ethics assessment, and incidental findings.

- Model deployment and final validation

  - Optimization of the computational cost of features; reiterate if needed.

- Solution in production

Figure: Data Analytics, machine learning, AI, XAI life cycle
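The training, tuning, selection, and final validation steps of this life cycle can be sketched as follows. This is a minimal illustration under stated assumptions, not a Snap4City implementation: the data are synthetic, the model is a simple k-nearest-neighbour regressor written with the standard library, and all names (`make_data`, `knn_predict`, `candidate_k`) are hypothetical. In practice, a dedicated ML library would normally replace the hand-written model.

```python
import random
import statistics


def make_data(n, seed=0):
    """Synthetic data (assumption): y = 2x plus Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 2 * x + rng.gauss(0, 0.5)) for x in xs]


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)


def mae(train, data, k):
    """Mean absolute error of the k-NN model on a data set."""
    return statistics.fmean(
        abs(y - knn_predict(train, x, k)) for x, y in data
    )


# Data preparation: split into training, validation, and test sets.
data = make_data(300)
train, valid, test = data[:200], data[200:250], data[250:]

# Training and tuning: evaluate each hyperparameter value in a fixed range.
candidate_k = [1, 3, 5, 9, 15]
valid_scores = {k: mae(train, valid, k) for k in candidate_k}

# Model selection: keep the value with the lowest validation error.
best_k = min(valid_scores, key=valid_scores.get)

# Final validation: measure the selected model on data it has never seen.
test_mae = mae(train, test, best_k)

# Reference point: a trivial model that always predicts the training mean.
train_mean = statistics.fmean(y for _, y in train)
baseline_mae = statistics.fmean(abs(y - train_mean) for _, y in test)

print(f"best k = {best_k}, test MAE = {test_mae:.3f}, "
      f"baseline MAE = {baseline_mae:.3f}")
```

Keeping the test set apart from tuning is the design choice that matters here: the validation set drives model selection, so only the untouched test set gives an honest estimate of the selected model. Comparing against a trivial baseline shows whether the model adds value at all.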

The developed DA processes may be activated by a Processing Logic (IoT App), which also passes some parameters for the computation. Typical parameters are the references (ServiceURI) of the data and the context to which the DA has to be applied. The results can be saved directly into the storage by the DA, or passed back to the calling Processing Logic (IoT App) for further processing. Data scientists strongly appreciate managing DA from the Processing Logic (IoT App), since it is very useful when several learning instances need to be launched in parallel, with different parameters, to perform DA model selection and tuning.
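The interaction described above can be sketched as a DA entry point that receives parameters, computes a result, and returns it to the caller. Everything specific in this sketch is an assumption made for illustration: the parameter names (`service_uri`, `threshold`), the example URI, the `fetch_values` stub that replaces a real data read, and the simple z-score anomaly check. It does not reproduce any Snap4City API, and a local thread pool stands in for the Processing Logic launching several instances in parallel.

```python
import json
import statistics
from concurrent.futures import ThreadPoolExecutor


def fetch_values(service_uri):
    """Hypothetical stand-in for reading the data behind a ServiceURI.

    Returns fixed synthetic values so that the sketch runs offline.
    """
    return [10.0, 11.0, 10.5, 30.0, 10.2, 9.8, 10.1, 10.4]


def run_analytic(params):
    """DA entry point: takes the caller's parameters, returns a result."""
    values = fetch_values(params["service_uri"])
    threshold = params.get("threshold", 2.0)
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    # Flag the positions whose distance from the mean exceeds the threshold,
    # measured in standard deviations (z-score).
    anomalies = [
        i for i, v in enumerate(values)
        if stdev > 0 and abs(v - mean) / stdev > threshold
    ]
    return {
        "service_uri": params["service_uri"],
        "threshold": threshold,
        "anomalies": anomalies,
    }


# Parameters as they might arrive from the caller (hypothetical payload).
payload = json.loads(
    '{"service_uri": "http://example.org/sensor/1", "threshold": 2.0}'
)
result = run_analytic(payload)
print(json.dumps(result))  # result passed back for further processing

# Several instances launched in parallel with different parameters.
param_sets = [
    {"service_uri": payload["service_uri"], "threshold": t}
    for t in (1.0, 2.0, 3.0)
]
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(run_analytic, param_sets))

for r in results:
    print(r["threshold"], r["anomalies"])
```

Returning a plain dictionary keeps the result easy to serialize as JSON, so the same function can either hand its output back to the caller or be extended to write it to storage.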